Since I started writing Substack letters, people from many different backgrounds have often asked for my views on artificial intelligence. “How much can an AI do?” “Where do you see AI evolving in the future?” “Do you think AI will take over from human beings?” Though I don’t have clear answers to those questions, I think it’s worth taking a broader look at what intelligence is. Is there a clear boundary of intelligence between humans and machines?

Aristotelian

Following tradition, let’s begin with the big name: Aristotle, who around 350 BCE was already thinking about where humans stand among the other species.

In his influential treatise Περὶ Ψυχῆς (Peri Psychēs), in English On the Soul, Aristotle proposed the theory of “three souls” (psyches): vegetal, animal, and rational. Plants have the capacities for nourishment and reproduction, the minimum that any living organism must possess. Animals have, in addition, the powers of sense-perception and self-motion. Humans have all of these as well as intellect. More precisely, the hierarchy of capabilities looks like this:

Organized structure → ability to absorb → growth → reproduction → appetition → sentience → imagination → desire, passion, wish → pleasure and pain → locomotion → thinking or mind.

For Aristotle, then, intellect comes from thinking, or mind, and this is what sets humans apart. Of this rational part, he writes: “we have no evidence as yet about mind or the power to think; it seems to be a widely different kind of soul, differing as what is eternal from what is perishable; it alone is capable of existence in isolation from all other psychic powers. All other parts of the soul, it is evident from what we have said, are, in spite of certain statements to the contrary, incapable of separate existence [...].”

This passage seems to anticipate Descartes’ mind-body dualism: the view that body and mind (here, soul) are of entirely different natures, so that one can exist without the other. The essential point, however, is that it suggests mind, or selfhood, is unique to humans and imperishable, standing at the top of the hierarchy of capabilities. Another interesting point is that, in Aristotle’s view, imagination, desire, passion, and wish are all independent of intellect.

Though pre-scientific, Aristotle, a keen observer and an original thinker, was massively influential on all that followed in the West (his ideas dominated psychology until the 19th century). Ever since, people have been thinking about hierarchies of being among species, about the different forms of intelligence, and even about a hierarchy of intelligence…

Evolutionary

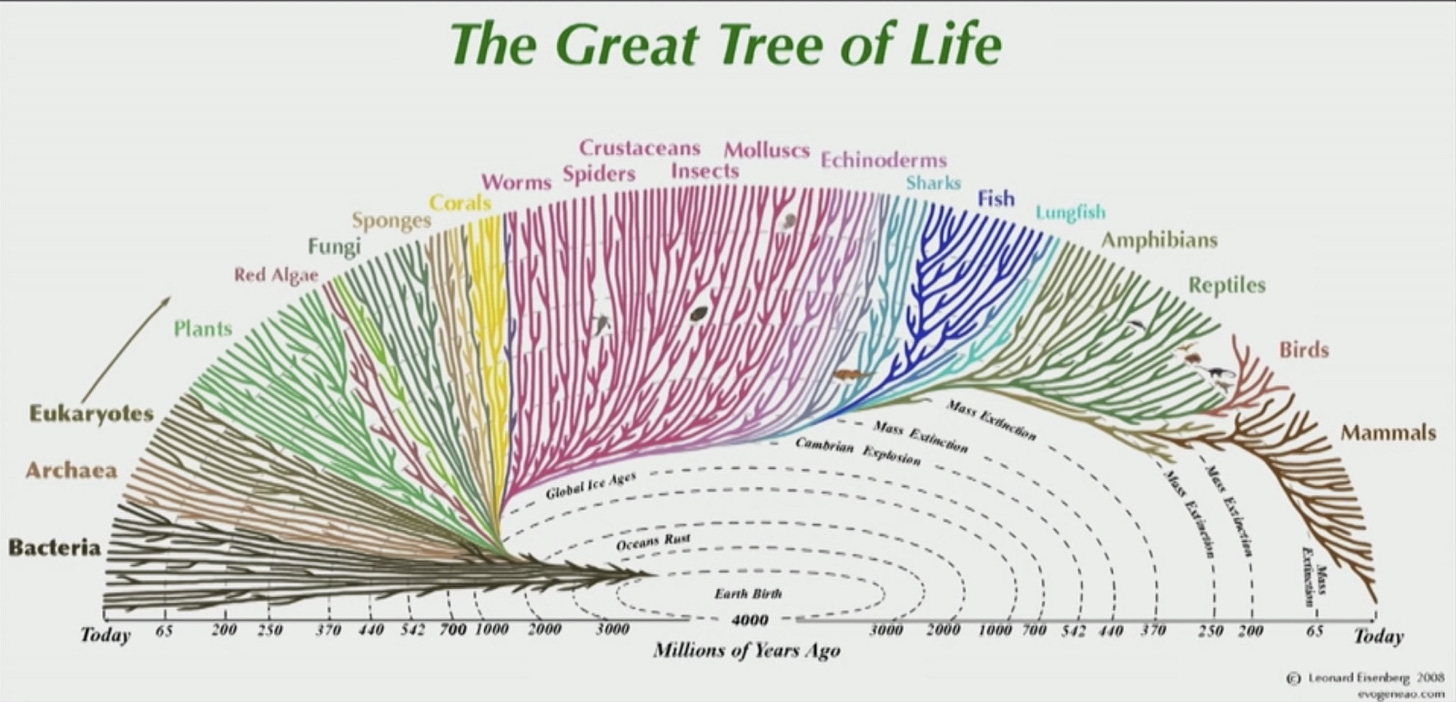

The picture above is called the Great Tree of Life. It visualizes life on the planet on a scale of millions of years. As time advances, the tree grows more complex and each branch becomes more detailed. In the wisdom behind this growing tree I see a kind of evolutionary intelligence. The idea is that all species, from bacteria to mammals, have evolved their behaviors through natural selection. Consider what insects and plants have developed, such as cocooning or photosynthesis: once you grasp their intricacy, it is hard to argue that this is not a kind of intelligence. In a sense, we can think of evolution as an R&D (research and development) process: a design process that exploits information in the environment to create, maintain, and improve the design of things.

Evolution might be smart, smarter than we think, but an individual organism doesn’t learn. This intelligence is an end product that emerges on an evolutionary time scale, and time undoubtedly adds another layer of complexity. It is also foresightless, extremely costly, and slow.
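To make “R&D without individual learning” concrete, here is a minimal sketch of a mutation-and-selection loop. Everything in it is an illustrative assumption rather than a model of real biology: the bit-string genomes, the all-ones `TARGET` standing in for the environment, and the population size and mutation rate. No individual ever changes during its lifetime; improvement only appears across generations, through blind copying errors and selection.

```python
import random

# Hypothetical "environment": the best possible genome is 20 ones.
TARGET = [1] * 20


def fitness(genome):
    """Count how many positions match what the environment rewards."""
    return sum(g == t for g, t in zip(genome, TARGET))


def mutate(genome, rate, rng):
    """Blind copying errors: flip each bit with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def evolve(generations=200, pop_size=30, rate=0.05, seed=0):
    """Mutation + selection. No individual ever changes during its lifetime."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in TARGET] for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        # Selection: only the fitter half survives and reproduces.
        population.sort(key=fitness, reverse=True)
        survivors = population[: pop_size // 2]
        # Reproduction with copying errors; nothing "knows" which changes will help.
        offspring = [mutate(rng.choice(survivors), rate, rng)
                     for _ in range(pop_size - len(survivors))]
        population = survivors + offspring
        best_per_generation.append(max(fitness(g) for g in population))
    return best_per_generation


history = evolve()
print(history[0], history[-1])
```

Because the survivors are carried over, the best fitness can only creep upward from one generation to the next, and every step is paid for by discarding half the population, which is one way to see why this kind of intelligence is costly and slow.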

Reinforcement learning

Now let’s take a closer look at one branch of the Great Tree of Life: the ant colony. In the natural world, ants of some species wander randomly and, upon finding food, return to their colony while laying down pheromone trails. If other ants find such a path, they are likely to stop traveling at random and instead follow the trail, returning and reinforcing it if they eventually find food. Over time, however, the pheromone trail starts to evaporate, reducing its attractive strength. The longer it takes an ant to travel down the path and back again, the more time the pheromones have to evaporate. A short path, by comparison, gets marched over more frequently, so pheromone density becomes higher on shorter paths than on longer ones. The overall result is that when one ant finds a good (short) path from the colony to a food source, other ants are more likely to follow it, and positive feedback eventually leads many ants to follow a single path.

Doesn’t this method feel familiar? It also has a scientific name: reinforcement learning. There are many ant-colony-based reinforcement learning algorithms inspired by similar ideas. Basically, it comes down to rewards and punishments: a behavior is either reinforced or met with an aversion, depending on its consequences. This is the simplest kind of learning an individual animal is capable of.
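The feedback loop described above is the core of ant colony optimization (ACO), an algorithm family inspired by exactly this behavior and closely related to reinforcement learning. Below is a minimal sketch with just two routes from nest to food; the function name, path lengths, ant count, and evaporation rate are illustrative assumptions. Each ant picks a route with probability proportional to its pheromone level and deposits an amount inversely proportional to the route’s length (a standard ACO simplification of “short paths get walked, and re-marked, more often”), and every trail evaporates a little each round.

```python
import random


def ant_colony_two_paths(lengths=(1.0, 2.0), n_ants=20, iterations=100,
                         evaporation=0.1, seed=0):
    """Toy ant colony optimization on alternative nest-to-food paths.

    Returns the final share of pheromone on each path.
    """
    rng = random.Random(seed)
    pheromone = [1.0] * len(lengths)  # start with no preference
    for _ in range(iterations):
        deposits = [0.0] * len(lengths)
        for _ in range(n_ants):
            # Choose a path with probability proportional to its pheromone.
            path = rng.choices(range(len(lengths)), weights=pheromone)[0]
            # Shorter paths earn more pheromone per ant (deposit = 1 / length).
            deposits[path] += 1.0 / lengths[path]
        # Evaporation weakens every trail, then fresh pheromone is added.
        pheromone = [(1 - evaporation) * p + d
                     for p, d in zip(pheromone, deposits)]
    total = sum(pheromone)
    return [p / total for p in pheromone]


shares = ant_colony_two_paths()
print(f"short path: {shares[0]:.3f}, long path: {shares[1]:.3f}")
```

With these settings nearly all of the pheromone ends up on the shorter path: the “reward” (extra pheromone) and the “punishment” (evaporation) together produce the positive feedback the paragraph describes, without any single ant knowing which path is shorter.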

This kind of learning often reminds me of The Prince, the best-known book of the eminent philosopher Niccolò Machiavelli. The main premise of The Prince is that people always behave in a completely self-interested manner: they are motivated by the desire for gain and the fear of punishment.

Internal simulation

Moving on, let’s turn to some cuter creatures: cats. When we watch a cat about to leap across a room, perhaps from a bookshelf to the sofa, we notice it hunkering down and looking. It seems as if gears are turning in the cat’s head, and we might wonder what is going on. The simplified answer is that the cat is running some sort of internal simulation. It is imagining how the leap will work: how its muscles will tense, how far it needs to stretch its front paws, whether it can make the leap at all… Certainly, this is not just reinforcement learning, not just reward and punishment. I call it internal simulation intelligence. The animal simulates a variety of possible solutions based on its understanding of the environment and then chooses the best one.

Someone might argue that the cat is just trying out variations of the jump in its head, which amounts to reinforcement learning done internally, so that it needs less simulation the next time. But the point is that its training data is massively augmented relative to what it actually experiences in the real world. The AlphaGo system likewise uses internal simulations (Monte Carlo tree search) to pick its best moves.
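Here is a minimal sketch of that “imagine many leaps, then pick the best” idea, in the style of Monte Carlo planning. The internal model is a hypothetical stand-in, not a claim about how a cat’s brain works: ideal projectile range on flat ground at a fixed 45° launch, with 5% noise on push-off speed playing the role of imprecise muscle control. The target distance, tolerance, and candidate speeds are likewise made-up numbers.

```python
import math
import random

GRAVITY = 9.81  # m/s^2


def imagined_landing(speed, rng, angle_deg=45.0, noise=0.05):
    """Hypothetical internal model: ideal projectile range on flat ground,
    with a little noise standing in for imprecise muscle control."""
    actual_speed = speed * (1 + rng.gauss(0, noise))
    angle = math.radians(angle_deg)
    return actual_speed ** 2 * math.sin(2 * angle) / GRAVITY


def plan_leap(target_distance, tolerance=0.15, rollouts=200, seed=0):
    """Imagine many leaps for each candidate push-off speed; keep the best one."""
    rng = random.Random(seed)
    candidates = [s / 10 for s in range(10, 61)]  # 1.0 to 6.0 m/s

    def success_rate(speed):
        # Fraction of imagined leaps that land close enough to the target.
        hits = sum(
            abs(imagined_landing(speed, rng) - target_distance) <= tolerance
            for _ in range(rollouts)
        )
        return hits / rollouts

    best = max(candidates, key=success_rate)
    return best, success_rate(best)


speed, rate = plan_leap(target_distance=1.5)
print(f"chosen speed: {speed} m/s, imagined success rate: {rate:.2f}")
```

Every one of the thousands of rollouts happens “in the head”: only the chosen leap would ever be tried in the real world, which is the augmentation of training data described above.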

Active perception

Internal simulation seems pretty smart already, right? However, when we solve a problem or make a decision, we rely not only on the passive reception of stimuli or on simulation, but on an active process involving memory and other internal processes. The British psychologist Richard Gregory called this active perception.

Perception is a constructive process that relies on top-down processing. A lot of information reaches our eyes, but much is lost by the time it reaches the brain (Gregory estimates about 90% is lost). Therefore, the brain has to guess what a person sees based on past experiences. We actively construct our perception of reality. Richard Gregory proposed that perception involved a lot of hypothesis testing to make sense of the information presented to the sense organs: sensory receptors receive information from the environment, which is then combined with previously stored information about the world which we have built up as a result of experience.

That still sounds abstract, so let’s do a fun exercise. What do you call the thing below?

It is a bicycle.

How do you define a bicycle? If you are a good old-fashioned programmer, you might say it’s the thing with pedals and two wheels that you ride. But what if it has training wheels? Then it has four wheels, but it’s still a bike. What if it’s towing a trailer? Still a bike. What if it’s a motorcycle? Okay, a motorcycle isn’t called a bicycle. What about an electric bike? A tandem bike?
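To see why hand-written definitions struggle, here is a hypothetical rule-based classifier written the way a “good old-fashioned” programmer might first attempt it. The `Vehicle` fields and the `is_bicycle` rule are illustrative assumptions, not taken from any real system.

```python
from dataclasses import dataclass


@dataclass
class Vehicle:
    name: str
    wheels: int
    has_pedals: bool
    has_engine: bool


def is_bicycle(v: Vehicle) -> bool:
    """Hand-written rule: pedals, exactly two wheels, no engine."""
    return v.has_pedals and v.wheels == 2 and not v.has_engine


examples = [
    Vehicle("road bike", 2, True, False),
    Vehicle("bike with training wheels", 4, True, False),
    Vehicle("bike towing a trailer", 4, True, False),
    Vehicle("motorcycle", 2, False, True),
    Vehicle("electric bike", 2, True, True),
]

for v in examples:
    print(f"{v.name}: {is_bicycle(v)}")
# road bike: True
# bike with training wheels: False
# bike towing a trailer: False
# motorcycle: False
# electric bike: False
```

The rule accepts the plain road bike and correctly rejects the motorcycle, but it also rejects the bike with training wheels and the one towing a trailer, which we would still call bicycles, and whether an e-bike counts hinges on an arbitrary choice about engines. Each patch to the rule invites new exceptions.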

Moreover, anyone who visits a modern art museum will see the images below as bicycles, even though they violate any logical rule one could write to define a bicycle.

Here is another story of active perception at work in real-world decision-making. The phrase “I know it when I see it” was used in 1964 by United States Supreme Court Justice Potter Stewart to describe his threshold test for obscenity in Jacobellis v. Ohio. In explaining why the material at issue in the case was not obscene under the Roth test, and was therefore protected speech that could not be censored, Stewart wrote: “I shall not today attempt further to define the kinds of material I understand to be embraced within [the] shorthand description [“hard-core pornography”], and perhaps I could never succeed in intelligibly doing so. But I know it when I see it, and the motion picture involved in this case is not that.”

This is exactly why good old-fashioned AI failed. No matter what features you put in, you can’t write a set of rules that captures pornography. Classical computing is all logical propositions, but the “ideas” in our neural nets aren’t logical propositions; they are learned invariants. Warren McCulloch made a similar point in 1950: “it is clear that we cannot tell what kind of thing we must look for in a brain when it has an idea, except that it must be invariant under all those conditions in which that brain is having that idea. So far we have considered particular hypotheses of cortical function. They are almost certainly wrong at some point. [...]”

The same learned-invariant principle goes much deeper than sensory perception. Even though “explainability” is still a problem, artificial neural nets that learn invariants (an invariant neuron maintains a high response to its feature despite certain transformations of its input) now rival and even surpass human performance at many tasks that were previously impossible to compute. Back to porn detection: Google engineer Alexander Mordvintsev developed a computer vision program called DeepDream, which uses a convolutional neural network to find and amplify the patterns the network has learned to recognize.
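The idea of an invariant response can be made concrete with a toy one-dimensional example. The sketch below hand-codes, rather than learns, a feature detector and takes its strongest response over all positions, similar in spirit to a convolution followed by global max pooling in a convolutional network. The feature and the signals are made-up illustrations; in a real network the feature weights would be learned from data.

```python
def detector_response(signal, feature):
    """Slide `feature` along `signal` and return the strongest match.

    Taking the max over positions makes the output invariant to where the
    pattern appears (translation invariance).
    """
    k = len(feature)
    return max(
        sum(f * s for f, s in zip(feature, signal[i:i + k]))
        for i in range(len(signal) - k + 1)
    )


feature = [1, -1, 1]               # hypothetical pattern the "neuron" is tuned to
early = [0, 0, 1, -1, 1, 0, 0, 0]  # pattern near the start
late = [0, 0, 0, 0, 0, 1, -1, 1]   # the same pattern, shifted right
other = [0, 1, 1, 1, 0, 0, 0, 0]   # a different pattern

print(detector_response(early, feature))  # 3
print(detector_response(late, feature))   # 3
print(detector_response(other, feature))  # 1
```

The response stays high wherever the pattern appears and drops for a different pattern, which is the property McCulloch describes: the “idea” is whatever stays constant across the transformations.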

Cultural transmission

Although much of neuroscience and ML developed first in the context of vision, similar debates took place, and similar conclusions were reached, in other domains such as language…

The near parallel between human languages and formal grammars must have been hard to resist in the 1950s. Noam Chomsky’s linguistic theory that “language acquisition is governed by universal, underlying grammatical rules that are common to all typically developing humans” quickly came to dominate linguistics and NLP research. However, this idea has been challenged by Daniel Everett and his work with the Pirahã people in the Amazon.

The Pirahã are a small (about 300 people) hunter-gatherer tribe living along the banks of the Maici River, a tributary of the Amazon. Everett went there as a missionary to learn their language and translate the Bible into it, but instead ended up losing his own religious faith and living with them for 30 years. He describes some of the unusual properties of their language and argues that these properties constrain their cognition in certain ways. In his paper in the journal Current Anthropology, he argues that Pirahã, an Amazonian language unrelated to any other living language, lacks several kinds of words and grammatical constructions that many researchers would have expected to find in all languages. He made it clear that these absences were due not to any inherent cognitive limitation on the part of its speakers, but to cultural values.

The obvious implication is that all of our cognition is linguistically constrained. Not just subordinate clauses, but also familiar abstractions like numbers, “some of” and “all of”, “left” and “right”, and “long ago” appear to be learned and culturally transmitted. These are not immanent, built-in ideas, but cultural technologies learnable by our squishy neural nets (though apparently difficult to acquire once the plasticity of childhood has passed). Such skills aren’t baked into our DNA or implemented in some kind of “reasoning organ” that we are born with. Nor, once we learn them, are they fully general or mechanical, for better and for worse.

Some of us also learn to do math and to program, though we clearly don’t implement those skills “natively” either: unless a problem is very short and simple, we need external aids such as paper and pencil, an abacus, or a computer.

Ending

Although it may be hard for us to accept, humans do not have an inbuilt “reasoning organ”; our version of reasoning is a learned and informal skill. Nor is it true that other animals are incapable of much learning: there is evidence of social learning in translocated animals. Not all animals are powered simply by instinct. What, then, about machines?

After all, we must always remember that it is not humans who rule the earth, nor any single species, but INTELLIGENCE.

Just as Mt. Fuji is always in view...

Resources:

Corpus Aristotelicum, Περὶ Ψυχῆς, Peri Psychēs (On the Soul)

S. S. Sebastian Echegaray, Simulation of animal behavior using neural networks, 2010

Daniel Dennett, From Bacteria to Bach and Back, 2017

H.D. Block, The Perceptron: a model for brain functioning. I, 1962

Richard Gregory, Seeing as thinking: an active theory of perception, 1972

Fordham University School of Law, Law and New Neuroscience, 2018

Warren McCulloch, Why the Mind Is in the Head, 1950

Daniel Everett, Cultural Constraints on Grammar and Cognition in Pirahã, 2005

From a lecture given by Blaise Agüera y Arcas